n8n Primer

What is n8n?

If you are familiar with apps like IFTTT (If This Then That) and Zapier, n8n’s basic premise should come as no surprise.

n8n sits alongside Zapier, Make, IFTTT and others in the “No-Code Automation” space, but it differs in that you can self-host it. n8n is also on the cutting edge when it comes to AI agents and interoperability between the various AI platforms.

Getting started with a self-hosted n8n instance (in Docker with compose) is quite straightforward. I would also consider adding a Qdrant container (local vector storage for AI Agent workflows) in the same compose/folder structure for ease of maintenance. Qdrant is essentially an efficient way for your AI Agents to store and interact with ‘memory’.
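To make the ‘memory’ idea a bit more concrete, here is a minimal sketch of what a vector store does conceptually: it keeps vectors alongside a payload and returns the payloads closest to a query vector. This is a toy in plain Python, not Qdrant's API; the class name, the three-number "embeddings" and the payload strings are all made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class ToyVectorMemory:
    """In-memory stand-in for a vector store (hypothetical, not Qdrant's API)."""

    def __init__(self):
        self.points = []  # list of (vector, payload) pairs

    def add(self, vector, payload):
        self.points.append((vector, payload))

    def search(self, query, limit=1):
        # Rank stored points by similarity to the query, best match first.
        ranked = sorted(
            self.points,
            key=lambda point: cosine_similarity(query, point[0]),
            reverse=True,
        )
        return [payload for _, payload in ranked[:limit]]


memory = ToyVectorMemory()
# Made-up 3-dimensional vectors; real embeddings come from an embedding model.
memory.add([0.9, 0.1, 0.0], "notes about video editing")
memory.add([0.0, 0.2, 0.9], "notes about home networking")
print(memory.search([0.8, 0.2, 0.1]))  # closest payload to the query vector
```

A real vector database does the same kind of lookup, but with persistence and indexes so that it stays fast with many more points.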

Here’s the compose file:

    services:
      n8n:
        image: docker.n8n.io/n8nio/n8n
        container_name: n8n
        restart: always
        ports:
          - 5678:5678
        environment:
          # Replace every XXupdate.this.to.yoursXX placeholder with your own values.
          - N8N_HOST=XXupdate.this.to.yoursXX
          - N8N_PORT=5678
          - N8N_PROTOCOL=https
          - NODE_ENV=production
          - WEBHOOK_URL=https://XXupdate.this.to.yoursXX/
          - GENERIC_TIMEZONE=XXupdate.this.to.yoursXX
          - N8N_DEFAULT_BINARY_DATA_MODE=filesystem
        volumes:
          # Bind mounts: data lives in folders next to this compose file.
          - ./n8n_data:/home/node/.n8n
          - ./n8n_local:/files

      # https://qdrant.tech/documentation/quickstart/
      qdrant:
        image: qdrant/qdrant
        hostname: qdrant
        container_name: qdrant
        restart: unless-stopped
        ports:
          - 6333:6333
        volumes:
          - ./qdrant_storage:/qdrant/storage

    # Named volume declaration; the services above use bind mounts (./n8n_data),
    # so nothing in this file references it.
    volumes:
      n8n_data:
        external: true

If you have any problems installing n8n, see the docker-compose setup page in the n8n documentation. Similarly, for Qdrant, see the quickstart documentation (https://qdrant.tech/documentation/quickstart/).
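Once the containers are started, a quick way to confirm that both are answering is to probe the two published ports. The sketch below is only an illustration and makes a few assumptions: the stack runs on `localhost`, the containers answer over plain HTTP on ports 5678 and 6333, n8n responds on `/healthz`, and Qdrant responds on its `/collections` REST endpoint. The function names are my own.

```python
import urllib.error
import urllib.request


def service_urls(host="localhost"):
    """URLs to probe, based on the ports published in the compose file."""
    return {
        "n8n": f"http://{host}:5678/healthz",
        "qdrant": f"http://{host}:6333/collections",
    }


def is_up(url, opener=urllib.request.urlopen, timeout=5):
    """Return True if the URL answers with HTTP 200, False on any error."""
    try:
        with opener(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False


def check_stack(host="localhost", opener=urllib.request.urlopen):
    """Probe every service and return a name -> reachable mapping."""
    return {name: is_up(url, opener) for name, url in service_urls(host).items()}


# Usage (needs the containers to be running):
#   print(check_stack())
```

If either value comes back `False`, `docker compose logs` for that service is usually the next place to look.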

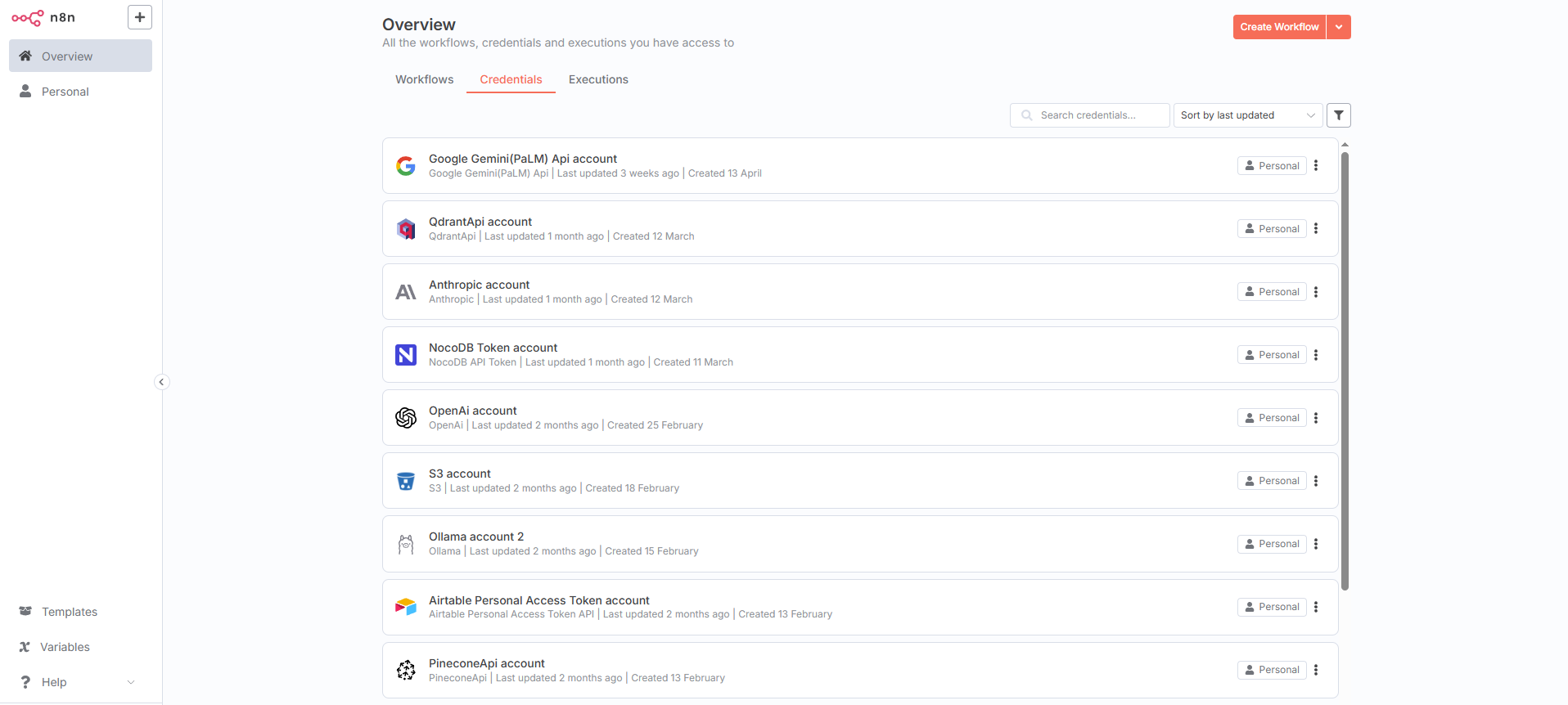

Once you are up and running, n8n is quite bare. The next step is to decide what you want to connect n8n to (OpenAI, Claude, etc.), grab the API keys/credentials for those services, and load them into n8n.

Once you plug all the credentials into n8n, you can then start to play.

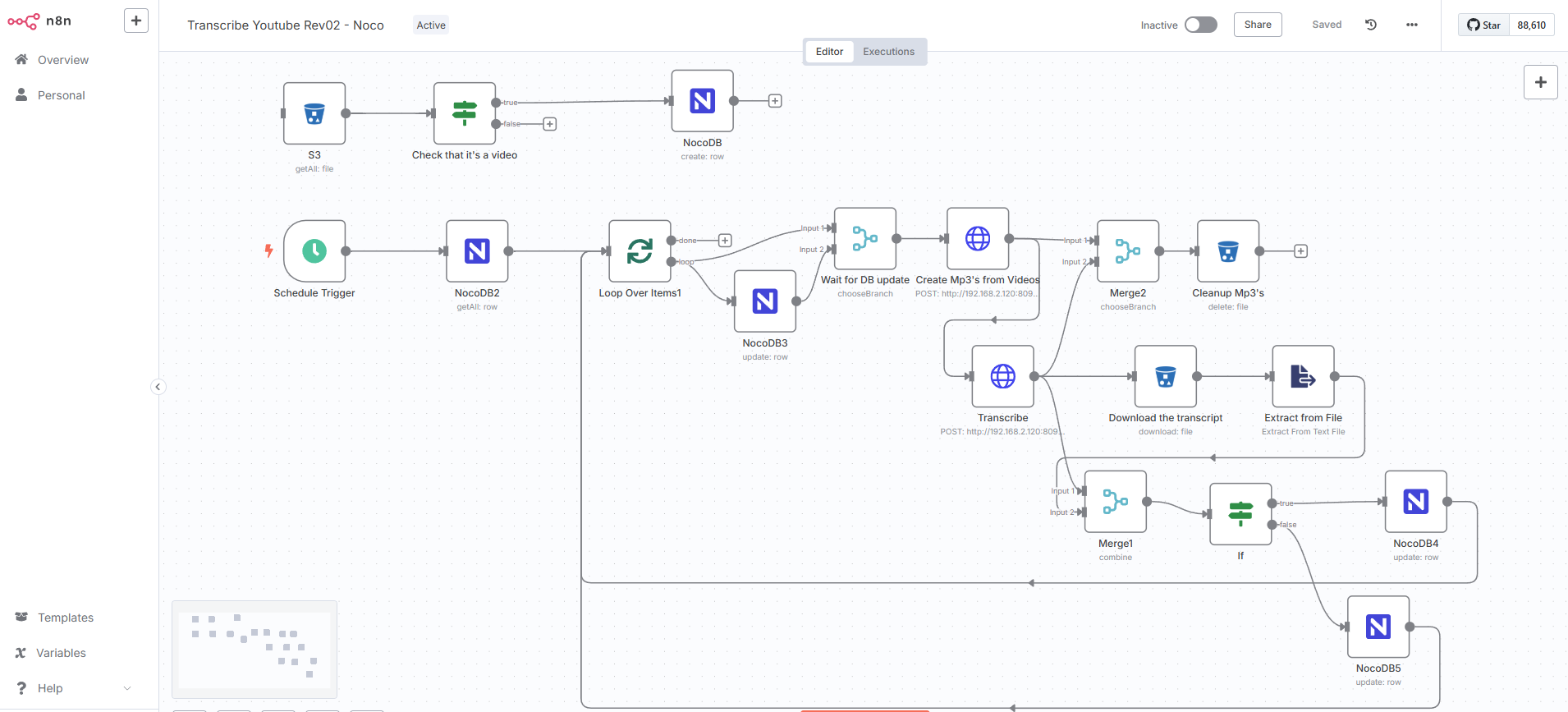

Workflows don’t have to be super complicated, but they can get complicated depending on what you want.

I was looking to transcribe all my YouTube videos and then ask different AI agents to give me feedback on what to do next, as well as to see how similar or dissimilar the feedback from the different models was. I store all the data in NocoDB (essentially a self-hosted Airtable) and then have all the AI Agents interact with the NocoDB table.
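As a rough illustration of the "how similar is the feedback" idea, here is a small sketch that compares every pair of responses by word overlap using Python's standard `difflib`. This is not how my workflow does it; it is a purely lexical comparison, the model names and feedback strings are placeholders, and a semantic comparison (for example with embeddings) would likely be more meaningful.

```python
from difflib import SequenceMatcher
from itertools import combinations


def pairwise_similarity(feedback):
    """Map each pair of model names to a 0..1 word-level similarity ratio."""
    scores = {}
    # Sort by model name so the pair keys come out in a stable order.
    for (name_a, text_a), (name_b, text_b) in combinations(sorted(feedback.items()), 2):
        words_a = text_a.lower().split()
        words_b = text_b.lower().split()
        scores[(name_a, name_b)] = SequenceMatcher(None, words_a, words_b).ratio()
    return scores


# Placeholder model names and feedback, for illustration only.
feedback = {
    "model_a": "Post shorter videos more often",
    "model_b": "post shorter videos more often",
    "model_c": "Improve the audio quality first",
}
for pair, score in pairwise_similarity(feedback).items():
    print(pair, round(score, 2))
```

A ratio near 1 means the two responses use nearly the same words in the same order; a ratio near 0 means they share almost nothing.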

As you can see, this workflow escalated quite a bit from a simple “transcribe and feed into NocoDB”.

In the end, I also needed to convert my .mp4 videos into .mp3 files that I could feed into a different Docker container I run on my main desktop (nca-toolkit), which transcribes the audio locally on my network using the OpenAI Whisper models.
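For anyone who wants to try the .mp4 to .mp3 step outside of n8n, here is a minimal sketch that shells out to ffmpeg. It assumes ffmpeg is installed and on your PATH, and it is not necessarily the conversion my workflow runs; the function names and the quality setting are my own choices for the example.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_command(video_path):
    """Return the ffmpeg argument list that writes an .mp3 next to the video."""
    src = Path(video_path)
    dst = src.with_suffix(".mp3")
    return [
        "ffmpeg",
        "-i", str(src),            # input video file
        "-vn",                     # drop the video stream, keep audio only
        "-codec:a", "libmp3lame",  # encode the audio as MP3
        "-q:a", "2",               # variable bitrate quality (lower is better)
        str(dst),
    ]


def convert_to_mp3(video_path):
    """Run ffmpeg; raises CalledProcessError if the conversion fails."""
    subprocess.run(build_ffmpeg_command(video_path), check=True)
    return Path(video_path).with_suffix(".mp3")


# Usage (needs ffmpeg and a real file):
#   convert_to_mp3("videos/my_video.mp4")
```

Building the command separately from running it makes it easy to print and inspect before pointing it at a folder full of videos.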

Then there were some checks to pull not only the text from the transcripts but also the subtitle (.srt) and plain-text (.txt) files to feed into NocoDB. All the paths reference a MinIO instance that I also have set up locally via Docker.
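To give a feel for that last step, here is a small sketch that strips the cue numbers and timestamps out of .srt content and assembles one row with the text plus the storage paths. The field names, the bucket name and the path layout are hypothetical; adjust them to whatever your NocoDB table and MinIO bucket actually look like.

```python
import re

# Matches SRT timing lines such as "00:00:01,000 --> 00:00:04,000".
TIMING = re.compile(r"^\d{2}:\d{2}:\d{2},\d{3} --> \d{2}:\d{2}:\d{2},\d{3}")


def srt_to_text(srt):
    """Strip cue numbers and timing lines from .srt content, keeping the text."""
    kept = []
    for line in srt.splitlines():
        line = line.strip()
        # Note: a spoken line made only of digits would also be skipped here.
        if not line or line.isdigit() or TIMING.match(line):
            continue
        kept.append(line)
    return " ".join(kept)


def build_row(video_name, srt, bucket="transcripts"):
    """Assemble one table row; field names and bucket are hypothetical."""
    return {
        "Title": video_name,
        "Transcript": srt_to_text(srt),
        "SrtPath": f"{bucket}/{video_name}.srt",
        "TxtPath": f"{bucket}/{video_name}.txt",
    }


sample = """1
00:00:01,000 --> 00:00:03,000
Hello everyone

2
00:00:03,500 --> 00:00:05,000
welcome back
"""
print(build_row("intro", sample))
```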

As I write this, I realize how much more digging and info was necessary to get started.

Anyone reading this, please reach out to me on Discord. I am happy to help put you on the right path and to add more info to this post or subsequent posts to help you on your automation/AI journey.