Proxmox Adventures Towards Network Reliability

Starting Point

I had been having problems with my internet dropping randomly (using PPPoE on Leaptel NBN). After about a week of back and forth with the ISP (escalated up to level 3 on the network team), I realized two things:

- I had become a corner case for the ISP, thanks to CGNAT and some peculiarities of my NBN NTD box. They needed to turn CGNAT back on to fix my connectivity issue.

- My OpnSense router (running on a ProtectLi Vault) was having some sort of weird hardware failure that caused it to reboot randomly and leave no logs. Here is what I tried:

  - Switched out the power supply, which didn’t fix it

  - Ran chkdsk, which caught some fixable things but no disk issues

  - Attempted to run memtest86, which didn’t show any failures but never ran for more than a few hours before the box rebooted

The ProtectLi Vault has been running for roughly 38k hours judging by the SMART data on the disk, so it has had a semi-reasonable run of a bit over 4 years straight without issue.
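As a quick sanity check on that figure, the arithmetic below converts power-on hours to years. The 38,000 is just the rounded "38k" from above, so the result is approximate.

```python
HOURS_PER_YEAR = 24 * 365  # ignoring leap days

power_on_hours = 38_000  # rounded "38k" figure read from the SMART data
years = power_on_hours / HOURS_PER_YEAR

print(f"{years:.1f} years of continuous uptime")
```

That works out to a little over 4 years of the box being powered on around the clock.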

I have been using OpnSense and HAProxy (High Availability Proxy) to expose a bunch of different services (including this blog) to the internet. With my ISP turning CGNAT back on, I can no longer expose ports like I used to. Internal HAProxy redirects still work as expected.

When my ISP turned CGNAT back on during the troubleshooting, I told them that was fine, but that it would take me several hours to switch to a solution that still allowed me to host public services.

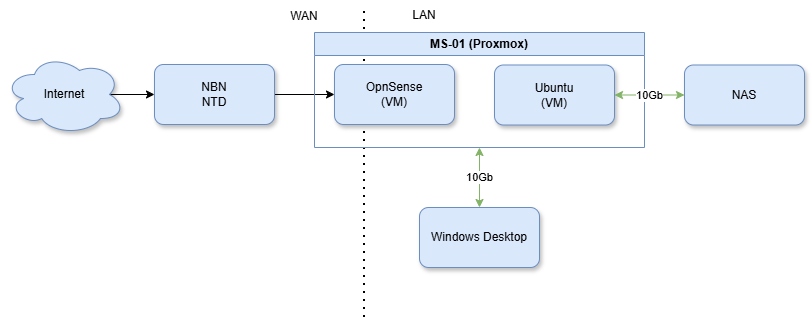

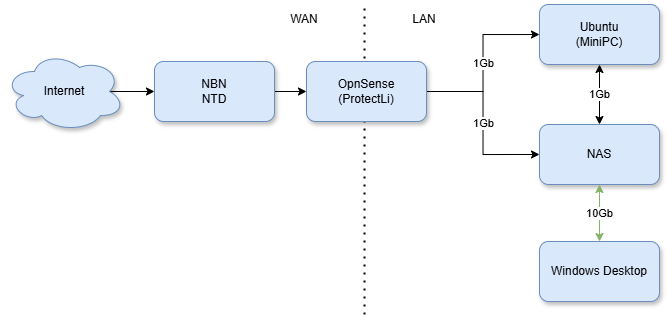

Here’s my original network layout:

Hosting through CGNAT

The simple answer to hosting through CGNAT is Cloudflare Tunnel, run via the cloudflared daemon.

As expected, it took me several hours to migrate my external services from HAProxy to Cloudflared. I did this via a docker-compose stack, so it is now very easy to expose new endpoints: I just need to set up DNS records and add the end service to the Cloudflared config.yaml file.
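To give a feel for what "add the end service to the config" means, here is a minimal sketch that renders a cloudflared-style config with one ingress rule per service. The `tunnel`, `credentials-file`, `ingress`, `hostname` and `service` keys and the final `http_status:404` catch-all follow the cloudflared config format as I understand it. The tunnel ID, credentials path, hostnames and backend URLs are all made-up placeholders, not my real setup.

```python
def render_cloudflared_config(tunnel_id: str, services: dict[str, str]) -> str:
    """Render a cloudflared config with one ingress rule per hostname."""
    lines = [
        f"tunnel: {tunnel_id}",
        # Hypothetical path; use wherever your tunnel credentials actually live.
        f"credentials-file: /etc/cloudflared/{tunnel_id}.json",
        "ingress:",
    ]
    for hostname, backend in services.items():
        lines.append(f"  - hostname: {hostname}")
        lines.append(f"    service: {backend}")
    # The last ingress rule has no hostname and catches everything else.
    lines.append("  - service: http_status:404")
    return "\n".join(lines) + "\n"


# Placeholder hostnames and docker-compose service names.
example_services = {
    "blog.example.com": "http://blog:8080",
    "photos.example.com": "http://photos:2342",
}
config_text = render_cloudflared_config("my-tunnel-id", example_services)
print(config_text)
```

Exposing another endpoint is then one more entry in `example_services`, plus the matching DNS record pointing at the tunnel.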

The Plan

With my main router causing issues, I made the decision to finally move to a more integrated solution for my main network stack. I had not played much with virtualization in ‘home production’ to date, but thought this was the perfect opportunity to take the plunge.

I decided that the open source version of Proxmox would be my hypervisor. I am almost exclusively on Linux (minus my main desktop), so a Debian-based Proxmox is not that unfamiliar to me at the system level.

In a stroke of luck in my moment of need, Minisforum had a sale on for their i9-13900H-equipped MS-01 workstation down where I live.

I bought the 32GB RAM + 1TB NVMe configuration to start, expecting that I could upgrade down the track when other things went on sale.

Fast forward a few weeks, and I wish I had bought the barebones kit of the MS-01. The NVMe drive suffered from infant mortality (a ZFS failure on a non-mirrored ZFS pool), and passing through the iGPU caused system instability over time with my Proxmox setup (random lock-ups of all 6 network interfaces). In the end I bought two 1TB NVMe drives for a mirrored ZFS pool, 64GB of DDR5 RAM, and an Nvidia RTX A1000 GPU, mainly for video transcoding.

Updated Network Diagram

Migration

Moving from bare metal Ubuntu to virtualized via Proxmox was an interesting exercise. I used Synology Active Backup for Business to take a full system backup and restore it into a new VM within Proxmox. There were some initial EFI/UEFI disk challenges in the migration, but after learning how to make a new EFI disk in Proxmox and use the correct network interface driver (E1000), it worked quite quickly.

The biggest challenge I had in the Proxmox migration had to do with network interfaces. The MS-01 has 4 NICs built in and two spots for high speed USB-C based NICs. I ended up using all 6 NICs. 2x 10GbE are used for a fabric between my NAS, Ubuntu VM, and Windows editing desktop. 2x 2.5GbE are used for Proxmox Management and the Ubuntu VM. 2x 5GbE USB-C NICs are used for OpnSense WAN and LAN.

It turns out that the 2x 2.5GbE NICs, when used with FreeBSD (OpnSense), do not have the correct drivers to hand out DHCP leases, which was one of the crazier faults I have had to troubleshoot in a loooong while. The interface looked perfectly happy but would not hand out leases. Switching OpnSense to the USB-C NICs fixed that issue very quickly, and it has been good since.
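For anyone chasing a similar "interface is up but no leases" fault, one way to test from a client on the LAN is to broadcast a DHCPDISCOVER and see whether anything answers. This is a rough sketch of that idea, not the tool I used at the time. The packet layout follows the standard BOOTP/DHCP format; the `build_discover` and `probe` helper names and the example MAC address are my own inventions, and `probe` needs root (it binds UDP port 68) and makes no attempt to pick a specific interface on a multi-homed host.

```python
import os
import socket
import struct

DHCP_MAGIC_COOKIE = b"\x63\x82\x53\x63"


def build_discover(mac: bytes, xid: int) -> bytes:
    """Build a minimal DHCPDISCOVER, padded to the 300-byte BOOTP minimum."""
    header = struct.pack(
        "!BBBBIHH4s4s4s4s16s64s128s",
        1,        # op: BOOTREQUEST
        1,        # htype: Ethernet
        6,        # hlen: MAC address length
        0,        # hops
        xid,      # transaction ID, echoed back by the server
        0,        # secs
        0x8000,   # flags: ask the server to reply via broadcast
        b"", b"", b"", b"",  # ciaddr, yiaddr, siaddr, giaddr (all zero)
        mac,      # chaddr, null-padded to 16 bytes by struct
        b"",      # sname
        b"",      # file
    )
    # Option 53 (message type) = 1 (DISCOVER), then option 255 (end).
    options = bytes([53, 1, 1, 255])
    return (header + DHCP_MAGIC_COOKIE + options).ljust(300, b"\x00")


def probe(mac: bytes, timeout: float = 5.0) -> bool:
    """Return True if any DHCP server replies to our DISCOVER. Needs root."""
    xid = int.from_bytes(os.urandom(4), "big")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.settimeout(timeout)  # applies to each recvfrom call
        sock.bind(("", 68))
        sock.sendto(build_discover(mac, xid), ("255.255.255.255", 67))
        try:
            while True:
                reply, _ = sock.recvfrom(2048)
                # op 2 is BOOTREPLY; the xid must match our request.
                if len(reply) >= 8 and reply[0] == 2 and reply[4:8] == xid.to_bytes(4, "big"):
                    return True
        except socket.timeout:
            return False
```

Calling `probe(bytes.fromhex("020000000001"))` as root on a client sitting on the affected LAN would return `False` after the timeout if the router never answers, which matches the symptom I was seeing.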

Post Migration

One of the main reasons I wanted to move to Proxmox was the ability to back things up in a more efficient and deduplicated fashion. Installing Proxmox Backup Server as a VM on the NAS via iSCSI proved quite easy to set up, and I now have full faith I could recover quickly if things break down over time.
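The deduplication part is worth a quick illustration. The toy below shows the general idea of a content-addressed chunk store: data is split into chunks, each chunk is stored once under its SHA-256 digest, and every backup is just a list of digests. It is a simplified sketch of the concept, not Proxmox Backup Server's actual code; the `ChunkStore` class and the tiny 4-byte chunk size are made up for the demo.

```python
import hashlib

CHUNK_SIZE = 4  # absurdly small so the demo is readable; real stores use far larger chunks


class ChunkStore:
    """Toy content-addressed store: identical chunks are only kept once."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}

    def backup(self, data: bytes) -> list[str]:
        """Store data and return its index (the ordered list of chunk digests)."""
        index = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # no-op if already stored
            index.append(digest)
        return index

    def restore(self, index: list[str]) -> bytes:
        """Rebuild the original data from an index."""
        return b"".join(self.chunks[digest] for digest in index)


store = ChunkStore()
monday = store.backup(b"AAAABBBBCCCC")
tuesday = store.backup(b"AAAABBBBDDDD")  # only the last chunk changed
print(len(store.chunks), "chunks stored for 6 chunks backed up")
```

Two backups of three chunks each end up as four stored chunks, because the unchanged `AAAA` and `BBBB` chunks are shared. That is why nightly backups of mostly unchanged VMs cost so little extra space.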

Final Thoughts

Proxmox looks like it will be a very powerful way to manage my network going forward. I look forward to being able to iterate on ideas much faster now that the ‘what if I break it’ concerns that came with several bare-metal hosts are gone. Worst case, I roll back to last night’s snapshot and we are good to go.